Secure static websites on AWS with Terraform & GitHub Actions

It was time to overhaul my blog - Gatsby, Netlify and the mess of moving between them made me want to handle my own infrastructure. I also sucked at React and JS, so I decided to start afresh.

My goal was to build from scratch the things I wanted to learn and use templates for the rest, so I created a static website using Zola and a theme I found in their showcase.

This post covers the messier part - setting up the infrastructure needed to host that blog on the web - using AWS, Terraform and GitHub Actions (GHA).

If you're interested in learning more about architecting solutions on AWS, check out some popular reads: AWS for Solutions Architects or The Self-Taught Cloud Computing Engineer.

Before you start

There are a few things this post assumes you already know (or at least the basics of).

A good starting point is knowing what cloud computing and AWS are, creating an account, and then understanding why you shouldn't be clicking a single button in the AWS console.

You will, however, need to do this once, because we need to store the Terraform state file in a bucket - go ahead and create an empty S3 bucket in the AWS console, which we will use later on as our backend bucket.
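Terraform is pointed at that bucket with a backend block along these lines. This is a minimal sketch: the bucket name, state key and region are placeholders for your own values. Backend blocks can't reference Terraform variables, so the values have to be written out literally.

```hcl
terraform {
  # Store terraform.tfstate in the bucket created by hand in the console
  backend "s3" {
    bucket = "<your-backend-bucket>"            # the empty bucket from the console
    key    = "<your-website>/terraform.tfstate" # object path of the state file
    region = "<backend-bucket-region>"
  }
}
```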

GitHub Actions isn't too complicated and is used minimally here - if you prefer another form of CD, that's up to you.

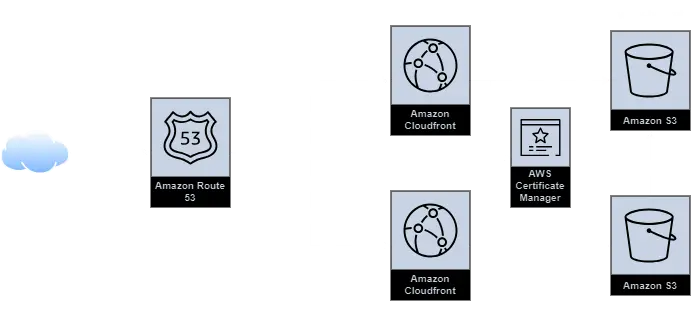

Architecture

At a high level, the flow we want to design looks like this.

This architecture mostly follows AWS's suggested way of hosting a static website. It consists of:

- A DNS service (Route53) to route incoming traffic to your domain

- A CDN (CloudFront) to efficiently serve your site, with HTTPS enabled using a certificate from ACM

- A storage solution (S3) to hold the static contents of your website

Note

If you are wondering why we need two buckets, the MDN docs explain it. Quoting them verbatim:

It is good advice to do this [two domains] since you can't predict which URL users will type in their browser's URL bar. You can choose to reverse the diagram and make the non-www domain as your canonical domain and your www domain redirect to it.

Initial Terraform setup

In the .tf files below, wherever you see text enclosed in <>, use your own values (without the <>).

We need to specify the providers we are going to use - let's do so with a providers.tf file.
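Here is a minimal sketch of providers.tf. The version constraint (5.x of hashicorp/aws) is my assumption, and acm_provider is a hypothetical alias name for the second provider, which is pinned to us-east-1 for ACM:

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0" # assumed version constraint
    }
  }
}

# Default provider for S3, CloudFront and Route53
provider "aws" {
  region = "<your-region>"
}

# Aliased provider used only by the ACM certificate (CloudFront needs us-east-1)
provider "aws" {
  alias  = "acm_provider"
  region = "us-east-1"
}
```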

Warning

The regions are kept separate to give some flexibility between ACM and the rest of your infrastructure. ACM certificates need to be in us-east-1 to work with CloudFront, so if you want to play around with different regions, make sure to go through the AWS documentation first.

Going forward, I assume you already have a custom domain name. If not, you can register one on AWS or with any other domain registrar.

Some variables used across our infrastructure will be declared in variables.tf, with their values in terraform.tfvars:

```hcl
domain_name = "<your-website.com>"
bucket_name = "<your-website.com>"
common_tags = {
  <your-tag-key> = "<your-tag-value>"
}
```
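Here is a minimal sketch of variables.tf that declares the three values set in terraform.tfvars. The types and descriptions are assumptions:

```hcl
variable "domain_name" {
  type        = string
  description = "Root domain of the website, e.g. example.com"
}

variable "bucket_name" {
  type        = string
  description = "Base bucket name; the canonical bucket is assumed to be www.<bucket_name>"
}

variable "common_tags" {
  type        = map(string)
  description = "Tags applied to every resource that supports them"
}
```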

S3

We start off by making our S3 resources: two buckets, one for the canonical domain (www in my case) and one for redirecting the non-canonical domain to the canonical domain.

Note

There are a few differences from what you will find in the AWS guide:

- We have a CORS configuration for the canonical bucket because of a small issue with CloudFront - I put this in as a precautionary measure to improve performance.

- We have some configuration for an IAM policy, because I used the newer Origin Access Control (OAC) method for authorizing CloudFront to access S3 instead of the legacy Origin Access Identity (OAI).

Create s3.tf with the following content:

# canonical s3
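Here is a minimal sketch of the canonical bucket, assuming it is named www.<bucket_name>. The resource names (www_bucket, allow_cloudfront_oac) are hypothetical, and the CORS values are placeholders for whatever precaution you need. The bucket policy is the OAC piece: it lets only the CloudFront distribution with a matching ARN read objects. That distribution is assumed to be named www_s3_distribution in cloudfront.tf.

```hcl
resource "aws_s3_bucket" "www_bucket" {
  bucket = "www.${var.bucket_name}"
  tags   = var.common_tags
}

# Precautionary CORS rule: read-only requests from the canonical origin
resource "aws_s3_bucket_cors_configuration" "www_bucket" {
  bucket = aws_s3_bucket.www_bucket.id

  cors_rule {
    allowed_headers = ["Authorization", "Content-Length"]
    allowed_methods = ["GET", "HEAD"]
    allowed_origins = ["https://www.${var.domain_name}"]
    max_age_seconds = 3000
  }
}

# OAC bucket policy: the CloudFront service may GET objects, but only on behalf
# of the canonical distribution (matched by its ARN)
data "aws_iam_policy_document" "allow_cloudfront_oac" {
  statement {
    actions   = ["s3:GetObject"]
    resources = ["${aws_s3_bucket.www_bucket.arn}/*"]

    principals {
      type        = "Service"
      identifiers = ["cloudfront.amazonaws.com"]
    }

    condition {
      test     = "StringEquals"
      variable = "AWS:SourceArn"
      values   = [aws_cloudfront_distribution.www_s3_distribution.arn]
    }
  }
}

resource "aws_s3_bucket_policy" "www_bucket" {
  bucket = aws_s3_bucket.www_bucket.id
  policy = data.aws_iam_policy_document.allow_cloudfront_oac.json
}
```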

# non-canonical s3
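The non-canonical bucket holds no content. It only uses S3's website redirect feature to send every request to the www domain over HTTPS. Here is a sketch, with root_bucket as a hypothetical resource name:

```hcl
resource "aws_s3_bucket" "root_bucket" {
  bucket = var.bucket_name
  tags   = var.common_tags
}

# Website configuration whose only job is redirecting to https://www.<domain>
resource "aws_s3_bucket_website_configuration" "root_bucket" {
  bucket = aws_s3_bucket.root_bucket.id

  redirect_all_requests_to {
    host_name = "www.${var.domain_name}"
    protocol  = "https"
  }
}
```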

ACM & CloudFront

Next, we need to set up a CloudFront distribution for some basic DDoS protection and to enable HTTPS.

To enable HTTPS, let's first set up an SSL certificate in ACM for CloudFront to use.

Create acm.tf with the following content:
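Here is a sketch of acm.tf that uses email validation, which matches the confirmation email mentioned in the warning. It assumes the us-east-1 provider alias is called acm_provider. The aws_acm_certificate_validation resource (cert_validation is a hypothetical name) makes terraform apply wait until the certificate is issued, so CloudFront isn't created against an unapproved certificate.

```hcl
resource "aws_acm_certificate" "ssl_certificate" {
  provider                  = aws.acm_provider
  domain_name               = var.domain_name
  subject_alternative_names = ["www.${var.domain_name}"]
  validation_method         = "EMAIL"
  tags                      = var.common_tags

  # Create the replacement certificate before destroying the old one
  lifecycle {
    create_before_destroy = true
  }
}

# Makes terraform apply wait until the emailed approval is done and the
# certificate is issued
resource "aws_acm_certificate_validation" "cert_validation" {
  provider        = aws.acm_provider
  certificate_arn = aws_acm_certificate.ssl_certificate.arn
}
```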

Warning

When you create the certificate, AWS will send a confirmation email before the rest of your Terraform script runs.

There's a good chance that, like me, your domain registrar has enabled privacy protection for your domain - in which case if you are using

Next up, let's create cloudfront.tf:

Note

There are a few differences from what you will find in the AWS guide:

- We have an aws_cloudfront_function; you can find the JS code for the function below. It's needed because of the way CloudFront's default root object works: it only applies to requests for the root URL, not to subdirectories such as /blog/, so those would otherwise not resolve to their index.html. This can be fixed in a variety of ways. I chose the least hacky one in my eyes, which is also one that AWS suggests.

- We are not using OAI (legacy); instead we are using OAC.

- We have a custom_error_response for handling 404s gracefully.

# cloudfront distribution for canonical domain
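Here is a sketch of the canonical distribution. It assumes hypothetical names from the other files (www_bucket in s3.tf, cert_validation in acm.tf) and a CloudFront function named redirect_subdir. It uses OAC with the bucket's REST endpoint and looks up the AWS managed CachingOptimized cache policy by name. It maps both 403 and 404 to the 404.html page Zola generates, because a private bucket answers 403 for missing keys when CloudFront isn't allowed s3:ListBucket.

```hcl
# AWS managed cache policy, looked up by name
data "aws_cloudfront_cache_policy" "optimized" {
  name = "Managed-CachingOptimized"
}

# OAC: CloudFront signs (SigV4) its requests to the private canonical bucket
resource "aws_cloudfront_origin_access_control" "www_oac" {
  name                              = "www-oac"
  origin_access_control_origin_type = "s3"
  signing_behavior                  = "always"
  signing_protocol                  = "sigv4"
}

resource "aws_cloudfront_distribution" "www_s3_distribution" {
  enabled             = true
  default_root_object = "index.html"
  aliases             = ["www.${var.domain_name}"]
  tags                = var.common_tags

  origin {
    # REST endpoint (not the website endpoint), which is what OAC works with
    domain_name              = aws_s3_bucket.www_bucket.bucket_regional_domain_name
    origin_id                = "www-s3-origin"
    origin_access_control_id = aws_cloudfront_origin_access_control.www_oac.id
  }

  default_cache_behavior {
    target_origin_id       = "www-s3-origin"
    allowed_methods        = ["GET", "HEAD"]
    cached_methods         = ["GET", "HEAD"]
    viewer_protocol_policy = "redirect-to-https"
    cache_policy_id        = data.aws_cloudfront_cache_policy.optimized.id

    # Appends index.html to directory-style URIs before the cache lookup
    function_association {
      event_type   = "viewer-request"
      function_arn = aws_cloudfront_function.redirect_subdir.arn
    }
  }

  # Missing keys in a private bucket come back as 403, so both map to 404.html
  custom_error_response {
    error_code         = 403
    response_code      = 404
    response_page_path = "/404.html"
  }
  custom_error_response {
    error_code         = 404
    response_code      = 404
    response_page_path = "/404.html"
  }

  restrictions {
    geo_restriction {
      restriction_type = "none"
    }
  }

  viewer_certificate {
    acm_certificate_arn      = aws_acm_certificate_validation.cert_validation.certificate_arn
    ssl_support_method       = "sni-only"
    minimum_protocol_version = "TLSv1.2_2021"
  }
}
```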

# cloudfront distribution for non-canonical domain
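The non-canonical distribution is simpler. Its origin is the redirect bucket's S3 website endpoint, which only speaks HTTP, so it is set up as a custom origin rather than an OAC-protected S3 origin. This is again a sketch. It assumes the hypothetical names root_bucket and cert_validation from the other files, and it reuses the CachingOptimized policy lookup (data.aws_cloudfront_cache_policy.optimized) declared with the canonical distribution.

```hcl
resource "aws_cloudfront_distribution" "root_s3_distribution" {
  enabled = true
  aliases = [var.domain_name]
  tags    = var.common_tags

  origin {
    # The S3 website endpoint performs the redirect but only speaks HTTP
    domain_name = aws_s3_bucket_website_configuration.root_bucket.website_endpoint
    origin_id   = "root-s3-website"

    custom_origin_config {
      http_port              = 80
      https_port             = 443
      origin_protocol_policy = "http-only"
      origin_ssl_protocols   = ["TLSv1.2"]
    }
  }

  default_cache_behavior {
    target_origin_id       = "root-s3-website"
    allowed_methods        = ["GET", "HEAD"]
    cached_methods         = ["GET", "HEAD"]
    viewer_protocol_policy = "redirect-to-https"
    cache_policy_id        = data.aws_cloudfront_cache_policy.optimized.id
  }

  restrictions {
    geo_restriction {
      restriction_type = "none"
    }
  }

  viewer_certificate {
    acm_certificate_arn      = aws_acm_certificate_validation.cert_validation.certificate_arn
    ssl_support_method       = "sni-only"
    minimum_protocol_version = "TLSv1.2_2021"
  }
}
```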

The code for the function used above needs to reside in functions/redirect-subdir.js
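Here is a sketch of such a function, adapted from AWS's published CloudFront Functions example for adding index.html to request URLs that don't include a file name. To keep it in one Terraform snippet, the JavaScript is inlined with a heredoc. If you keep the separate functions/redirect-subdir.js file instead, put the JavaScript between the EOT markers into that file and set code = file("${path.module}/functions/redirect-subdir.js"). The resource name redirect_subdir is hypothetical.

```hcl
resource "aws_cloudfront_function" "redirect_subdir" {
  name    = "redirect-subdir"
  runtime = "cloudfront-js-1.0"
  publish = true # publish to LIVE so the distribution can use it

  code = <<-EOT
    function handler(event) {
      var request = event.request;
      var uri = request.uri;

      if (uri.endsWith('/')) {
        // "/blog/" -> "/blog/index.html"
        request.uri += 'index.html';
      } else if (!uri.includes('.')) {
        // "/blog" (no file extension) -> "/blog/index.html"
        request.uri += '/index.html';
      }
      return request;
    }
  EOT
}
```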

Route53

Last but not least, we need to get away from the ugly CloudFront URL and put our own custom domain in front of it - let's set this up with route53.tf:
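Here is a sketch of route53.tf: a hosted zone plus alias A records that point the root and www names at their CloudFront distributions. The resource names are hypothetical, and the distribution names are assumed to be www_s3_distribution and root_s3_distribution in cloudfront.tf.

```hcl
resource "aws_route53_zone" "main" {
  name = var.domain_name
  tags = var.common_tags
}

# example.com -> redirect distribution
resource "aws_route53_record" "root_a" {
  zone_id = aws_route53_zone.main.zone_id
  name    = var.domain_name
  type    = "A"

  alias {
    name                   = aws_cloudfront_distribution.root_s3_distribution.domain_name
    zone_id                = aws_cloudfront_distribution.root_s3_distribution.hosted_zone_id
    evaluate_target_health = false
  }
}

# www.example.com -> canonical distribution
resource "aws_route53_record" "www_a" {
  zone_id = aws_route53_zone.main.zone_id
  name    = "www.${var.domain_name}"
  type    = "A"

  alias {
    name                   = aws_cloudfront_distribution.www_s3_distribution.domain_name
    zone_id                = aws_cloudfront_distribution.www_s3_distribution.hosted_zone_id
    evaluate_target_health = false
  }
}
```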

After your Route53 zone gets created, you need to configure your domain settings to point to Route53's name servers. This step varies based on where your domain is registered - go through this for a starting point.
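To avoid hunting for the name servers in the console, a small output can print them after terraform apply. This sketch assumes the hosted zone resource in route53.tf is named main (a hypothetical name):

```hcl
# Name servers to copy into your registrar's settings
output "name_servers" {
  value = aws_route53_zone.main.name_servers
}
```

Running terraform output name_servers afterwards prints the list again.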

To apply the changes, create an AWS IAM user with permissions for all the resources created here and set up its credentials in your CLI.

Once all of this is in place, a quick terraform init and terraform apply, plus a bit of patience, should get you up and running (don't forget to approve your SSL certificate request!).

You can optionally automate this step - I found it to be overkill as I didn't see myself changing the infrastructure a lot once I had it up and running. HashiCorp has some documentation to help you get started if you are using GHA.

Hosting your content

Once your infrastructure is in place, you need to put your static website files in your S3 bucket, and this is probably something you will be changing frequently - so let's automate it!

In my case, I had a Zola website, and Zola's documentation has an example pipeline for GHA. Your pipeline might vary based on how you created your static website; apart from the build step below, everything should be the same.

```yaml
name: Build and Publish to AWS S3

on:
  push:
    branches:
      - master

jobs:
  run:
    runs-on: ubuntu-latest
    timeout-minutes: 10
    steps:
      - uses: actions/checkout@v3

      # Install a pinned Zola binary
      - uses: taiki-e/install-action@v2
        with:
          tool: zola@<zola-version>

      # Zola writes the generated site to ./public
      - name: Build
        run: zola build

      # Upload ./public to the canonical bucket and invalidate the CloudFront cache
      - uses: reggionick/s3-deploy@v4
        env:
          AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
          AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          AWS_DEFAULT_REGION: us-east-1
        with:
          folder: public
          bucket: <canonical-bucket-name>
          private: true
          bucket-region: us-east-1
          dist-id: ${{ secrets.CLOUDFRONT_DISTRIBUTION_ID }}
          invalidation: /*
```
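The workflow needs the canonical bucket name and a CLOUDFRONT_DISTRIBUTION_ID repository secret. Terraform outputs are one way to get both values. This sketch assumes the hypothetical resource names www_bucket (s3.tf) and www_s3_distribution (cloudfront.tf):

```hcl
# Value for the workflow's "bucket" input
output "canonical_bucket_name" {
  value = aws_s3_bucket.www_bucket.id
}

# Value for the CLOUDFRONT_DISTRIBUTION_ID repository secret
output "cloudfront_distribution_id" {
  value = aws_cloudfront_distribution.www_s3_distribution.id
}
```

After terraform apply, terraform output -raw cloudfront_distribution_id prints just the ID, ready to paste into the secret.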

With that, you should be able to access your website over HTTPS on your canonical domain.

Till the next blog, adieu.